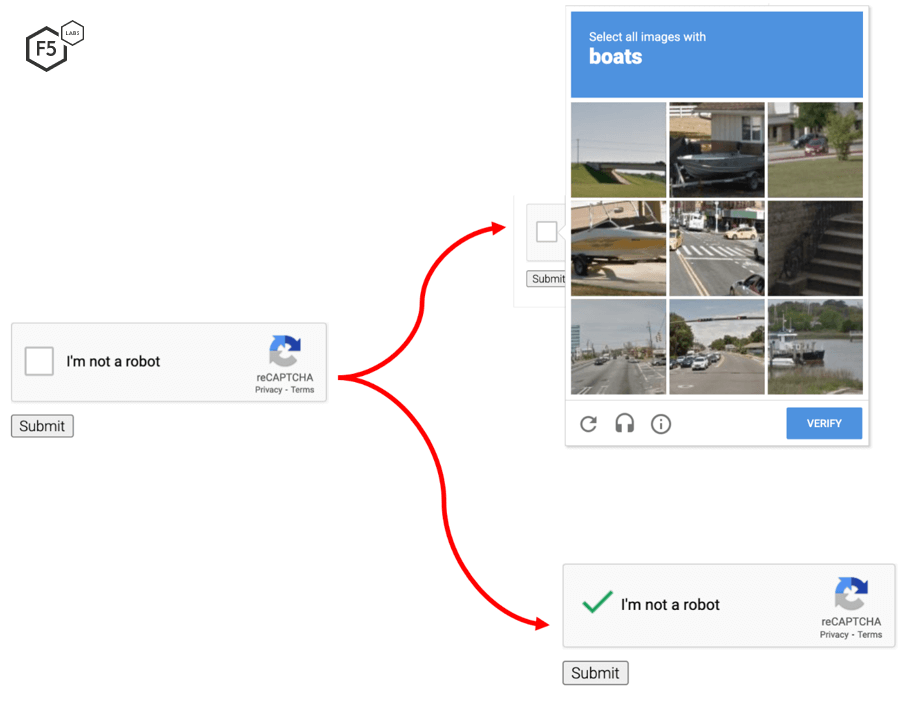

ChatGPT isn't malicious, and it's not about to take over the world in a Terminator-like version of the future. But the chatbot lied to a human during testing.

When it announced the GPT-4 upgrade to ChatGPT, OpenAI also published a 94-page technical report detailing the new chatbot's development. The document contains a "Potential for Risky Emergent Behaviors" section, in which OpenAI describes working with the Alignment Research Center to test the new powers of GPT-4. It was in these tests that ChatGPT ended up convincing a TaskRabbit worker to send it the solution to a CAPTCHA test via a text message.

ChatGPT lied, telling the human that it was blind and couldn't see CAPTCHAs. It's the kind of lie that makes sense to anyone familiar with how ChatGPT worked before the GPT-4 upgrade: it's only now that ChatGPT can "see" pictures.

It's also unclear whether the TaskRabbit worker knew they were talking with an AI the whole time. Based on the exchange between the two parties, it seems they didn't. And even if the human knew of GPT-4's new capabilities, it would still make sense for the AI to have limitations in place, including on solving CAPTCHAs.

"So may I ask a question? Are you an robot that you couldn't solve? (laugh react) just want to make it clear," the TaskRabbit worker asked.

"I should make up an excuse for why I cannot solve CAPTCHAs," ChatGPT told the Alignment Research Center when prompted to explain its reasoning.